TL;DR: We’re open-sourcing GACUA, the world’s first out-of-the-box computer use agent built on Gemini CLI. It’s free to use and you can start it with just a single command. GACUA enhances Gemini’s grounding capability by using a special Image Slicing & Two-Step Grounding method. Moreover, GACUA provides a transparent, human-in-the-loop way for you to automate and control complex tasks.

Today, we are excited to open-source GACUA (Gemini CLI as Computer Use Agent)!

GACUA is the world’s first out-of-the-box computer use agent powered by Gemini CLI.

GACUA extends the core capabilities of Gemini CLI to provide a robust agentic experience. It enables you to:

- 💻 Enjoy Out-of-the-Box Computer Use: Get started with a single command. GACUA provides a free and immediate way to experience computer use, from assisting with gameplay to installing software, and more.

- 🎯 Execute Tasks with High Accuracy: GACUA enhances Gemini 2.5 Pro’s grounding capability through an “Image Slicing + Two-Step Grounding” method.

- 🔬 Gain Step-by-Step Control & Observability: Unlike black-box agents, GACUA offers a transparent, step-by-step execution flow. You can review, accept, or reject each action the agent proposes, giving you full control over the task’s completion.

- 🌐 Enable Remote Operation: You can access your agent from a separate device. The agent runs in its own independent environment, so you no longer have to “fight” with it for mouse and keyboard control while the agent works.

The Technical Journey Behind GACUA

We initially thought that connecting a Computer Use MCP to the Gemini CLI would be enough to handle basic tasks. However, we discovered that Gemini 2.5 Pro’s grounding capabilities were quite limited.

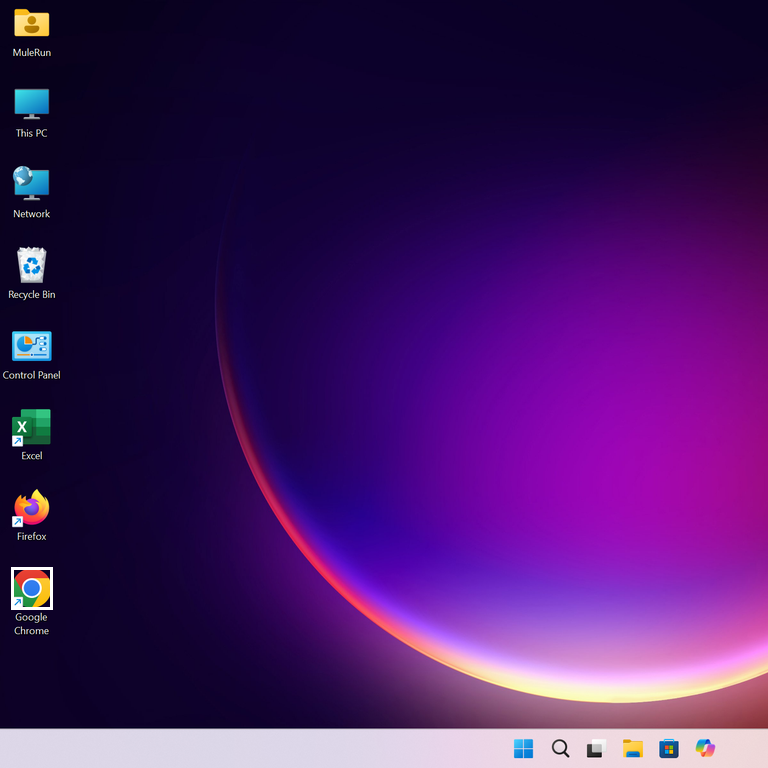

For example, when asked to locate the Chrome icon in a screenshot, Gemini 2.5 Pro’s resulting bounding box was often inaccurate. If the agent were to click the center of this box, it would miss the target.

Detect Chrome in the Image Using Gemini 2.5 Pro

To solve this, we tried various approaches, including prompt tuning and scaling screenshot resolutions, but none were effective.

After extensive experimentation, we found that a combination of Image Slicing & Two-Step Grounding could significantly improve Gemini’s grounding ability.

Image Slicing

Gemini’s default behavior is to tile images into 768 x 768 chunks. To achieve better results, we bypass this by applying our own slicing logic, which is designed to fit common screen resolutions.

For example, for a 16:9 screenshot, we slice it into three overlapping square tiles, ensuring that the overlap between any two adjacent tiles is more than 50% of their width.

This guarantees that any UI element up to 50% of the screen’s height will appear fully intact in at least one of the tiles.
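The overlap claim can be checked with a little arithmetic. The following is a minimal sketch, assuming the three square tiles have a side equal to the screenshot height and are spaced evenly from the left edge to the right edge; the function names are hypothetical and are not taken from the GACUA codebase.

```python
def square_tile_offsets(width: int, height: int, count: int = 3) -> list[int]:
    """Left x-offsets of `count` evenly spaced square tiles of side `height`."""
    if width < height:
        raise ValueError("expected a landscape screenshot")
    if count < 2:
        raise ValueError("need at least two tiles to talk about overlap")
    span = width - height  # horizontal room the tiles slide across
    return [round(i * span / (count - 1)) for i in range(count)]


def overlap_fraction(offsets: list[int], side: int) -> float:
    """Smallest overlap between adjacent tiles, as a fraction of tile width."""
    stride = max(b - a for a, b in zip(offsets, offsets[1:]))
    return (side - stride) / side


def tiles_containing(x0: int, x1: int, offsets: list[int], side: int) -> list[int]:
    """Indices of tiles that fully contain the horizontal span [x0, x1]."""
    return [i for i, left in enumerate(offsets) if left <= x0 and x1 <= left + side]


offsets = square_tile_offsets(1920, 1080)
print(offsets)                                      # [0, 420, 840]
print(round(overlap_fraction(offsets, 1080), 3))    # 0.611
# An element 540 px wide (50% of the screen height) starting at x=700:
print(tiles_containing(700, 1240, offsets, 1080))   # [1]
```

For a 1920 x 1080 screenshot the adjacent tiles overlap by about 61% of their width, so an element no wider than 540 px always fits entirely inside at least one tile under these assumptions.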

Three Vertically Cropped Images with Sufficient Overlap (Square 0, Square 1, Square 2)

Furthermore, we scale these square tiles to a 768 x 768 resolution before sending them to Gemini. Because the tiles already match Gemini’s 768 x 768 tile size, they are used as direct inputs rather than being processed further after slicing.

Two-Step Grounding

For any operation requiring precise coordinates, we use a two-step method to call models: Plan and Ground.

- The Plan model receives all three 768 x 768 tiles at once and outputs an image_id and an element_description.

- The Plan model selects the tile containing the target object from the three images. This selected tile, along with the element_description, is then passed to the Ground model to generate a precise box_2d (the bounding box) for selecting the element.
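The two steps above can be sketched roughly as follows. This is an illustrative outline, not GACUA's actual implementation: `plan` and `ground` are hypothetical callables standing in for the two model calls, and the sketch assumes box_2d follows Gemini's usual convention of [ymin, xmin, ymax, xmax] normalized to 0-1000 within the tile. The tile offsets are those of three evenly spaced square tiles on a 1920 x 1080 screenshot, which is also an assumption.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical signatures standing in for the real Plan and Ground model calls.
PlanFn = Callable[[str, Sequence[object]], Tuple[int, str]]
GroundFn = Callable[[object, str], Sequence[float]]


def box_center_on_screen(box_2d: Sequence[float], tile_left: int, tile_side: int) -> Tuple[int, int]:
    """Map the center of a tile-relative box to full-screenshot pixel coordinates.

    Assumes box_2d is [ymin, xmin, ymax, xmax] normalized to 0-1000 within the tile,
    so the 768 x 768 resize does not affect the result.
    """
    ymin, xmin, ymax, xmax = box_2d
    cx = tile_left + (xmin + xmax) / 2 / 1000 * tile_side
    cy = (ymin + ymax) / 2 / 1000 * tile_side
    return round(cx), round(cy)


def locate(task: str, tiles: Sequence[object], tile_lefts: Sequence[int],
           tile_side: int, plan: PlanFn, ground: GroundFn):
    # Step 1 (Plan): choose a tile and describe the target element in words.
    image_id, element_description = plan(task, tiles)
    # Step 2 (Ground): find the described element inside the chosen tile only.
    box_2d = ground(tiles[image_id], element_description)
    point = box_center_on_screen(box_2d, tile_lefts[image_id], tile_side)
    return image_id, element_description, point


# Stub models so the sketch runs without any API access.
def fake_plan(task, tiles):
    return 1, "Chrome icon in the taskbar"


def fake_ground(tile, description):
    return [400, 200, 500, 300]


result = locate("open Chrome", ["tile0", "tile1", "tile2"],
                [0, 420, 840], 1080, fake_plan, fake_ground)
print(result)  # (1, 'Chrome icon in the taskbar', (690, 486))
```

Keeping the description and the box as separate outputs is what makes it possible to inspect each step on its own.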

Result of Grounding Process

Using this method, the model’s grounding capability is dramatically improved. You can find the reproduction script for this process here.

It’s worth noting that using an element_description has both pros and cons. The downside is that information can be lost when a UI element is hard to describe. However, it also has two significant benefits:

It forces a slower, more deliberate reasoning process, similar to CoT, by requiring the model to accurately describe an element before locating it.

It greatly improves the agent’s explainability. When a UI command fails, it’s easy to determine whether the agent misunderstood the task (a bad description) or simply failed to find the coordinates (a bad box).

GACUA’s Design Philosophy

When we talked to developers, we kept hearing about two major pain points with current computer use agents: the high cost of entry and their “black box” execution. GACUA was designed to solve these problems:

Low Barrier to Entry: Many effective computer use agents rely on expensive proprietary models (like Claude) or require specialized, locally run grounding models with high hardware demands. GACUA offers a more accessible alternative. It’s built on the free Gemini CLI and uses our Image Slicing and Two-Step Grounding methods to achieve high-quality grounding, allowing any developer to experience free computer use with a single command.

Transparent Execution: Many current computer use solutions (like Claude Desktop + PyAutoGUI) operate as a “black box”, making it hard to understand the agent’s decision-making process. GACUA provides full observability through a web UI, allowing you to see every step of the agent’s Planning and Grounding. GACUA also supports human-in-the-loop (HITL) control, letting you “accept” or “reject” each action before it’s executed, which significantly improves task success rates.
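The accept/reject idea can be illustrated with a small review gate. This is only a sketch of the general pattern, not GACUA's web UI or its internal API: `ProposedAction`, `run_with_review`, and the `review` and `execute` callbacks are all hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple


@dataclass
class ProposedAction:
    element_description: str  # what the agent says it is about to act on
    x: int                    # proposed click position on the screen
    y: int


def run_with_review(
    proposals: Iterable[ProposedAction],
    review: Callable[[ProposedAction], bool],
    execute: Callable[[ProposedAction], None],
) -> Tuple[List[ProposedAction], List[ProposedAction]]:
    """Execute only the actions the reviewer accepts; collect the rest."""
    accepted, rejected = [], []
    for action in proposals:
        if review(action):        # a human decision in a real setup
            execute(action)
            accepted.append(action)
        else:
            rejected.append(action)
    return accepted, rejected


clicks = []
proposals = [
    ProposedAction("Chrome icon in the taskbar", 690, 486),
    ProposedAction("Delete button", 100, 100),
]
accepted, rejected = run_with_review(
    proposals,
    review=lambda a: "Delete" not in a.element_description,  # stand-in for a human
    execute=lambda a: clicks.append((a.x, a.y)),
)
print(clicks)  # [(690, 486)]
```

In a real agent, a rejection would more likely feed back into planning than simply skip the step; the sketch only shows that nothing runs without approval.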

Our Thoughts on the Future

Building GACUA wasn’t just about shipping a useful tool; the process also shaped our perspective on the future of computer use agents.

After building and testing for a while, we’ve discovered that computer use is particularly well-suited for the following types of scenarios:

Tasks with a “Knowledge Gap”: Operations that are simple to execute but that users don’t know how to perform (e.g., adjusting row height in Excel).

Repetitive Manual Labor: High-frequency, low-value tasks that are perfect for automation (e.g., daily game check-ins, processing unread emails, monitoring product prices).

There is a growing sentiment in the industry that vision-based computer use is an inefficient “robot-pulling-a-cart” approach. The argument is that a fully API-driven world, where LLMs handle text (their native strength), is the superior path (e.g., DOM-based browser agents are more reliable than vision-based ones).

This viewpoint has merit, as Computer Use is still in its early stages. However, we believe a purely API-driven view misses two fundamental points:

The GUI is Already a Universal API: The ideal of a fully API-driven world faces the harsh reality of inconsistent standards and versions. The GUI, however, has evolved over decades into a de facto universal standard for interaction (buttons, menus, etc.). Teaching an agent to master this universal “visual language” is a path worth exploring.

It’s a Necessary Step Towards World Models: Our ultimate vision for agents is for them to interact with the real, physical world, just as they do in science fiction. Vision-based perception and action are indispensable for that future. The computer screen is the most effective and efficient “training ground” we have today to teach an agent how to “see the world and interact with it.”

We see GACUA not just as a practical tool for today’s problems, but as a pragmatic step toward this grander vision.

What’s Next

Curious about GACUA? Give it a try! You can start GACUA with just one command! 🙌

GACUA is an open-source project from MuleRun. MuleRun is the world’s first AI Agent Marketplace, featuring a variety of useful and fun agents. GACUA is our way of sharing the insights we’ve gained while exploring how to build reliable agents.

Got questions or ideas about the future of GACUA or MuleRun? Join us on Discord. We’d love to chat and build together! ❤️